Stop doing security the ‘right’ way

Introduction

More than 75% of reported security incidents are caused by software mistakes that lead to information leakage, execution of unauthorised transactions or service unavailability. These incidents can cause significant business damage, both reputational and financial (in the order of millions of dollars).

Organisations and governments invest remarkably large amounts of money in cyber security to protect their sensitive information and systems from unauthorised activities, though, as reported incidents show, not always with the expected success.

Even though software development has moved towards an “Agile” way of working with faster feedback, security still mostly follows the traditional “Waterfall” step-by-step style. Massive effort and budget are spent on ensuring software security in the last steps of the SDLC (Software Development Life Cycle), mainly via penetration tests. We strongly believe that integrating security into the earlier steps, including design and development, will reduce effort and budget by at least 50% and significantly decrease vulnerabilities.

Considering that most of the issues originate from the software itself, it is important that developers understand the notion and value of security and make it an integral part of their work throughout the process. They should be aware of the risks posed while writing code in order to be able to prevent them as soon as possible.

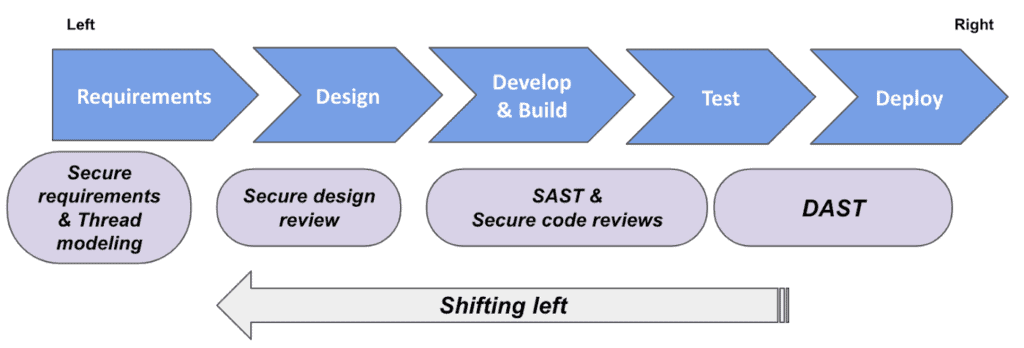

Shifting left on security is a practice that suggests exactly that. A system is designed, developed, tested and deployed with security in mind (security by design), introducing the concept as early as possible, in the “Develop & Build” phase of the SDLC. This is not only a change of “tools and processes” but also a cultural one, with significant benefits: it saves organisations time and money, and it identifies and resolves more of the weaknesses that may lead to security vulnerabilities.

In this article I will dive deep into the dos and don’ts of this approach, based on the experience we’ve accumulated by looking into tens of systems and development teams at large organisations.

The traditional approach of securing software systems

Users want to be sure that their data is securely stored and their transactions are protected from malicious users. When vulnerabilities are exposed, users lose trust in the product and the organisation that develops it, which can have serious business implications. Therefore security, especially for web and mobile applications, which make up the majority of software products nowadays, is a very important, if not the most important, non-functional requirement.

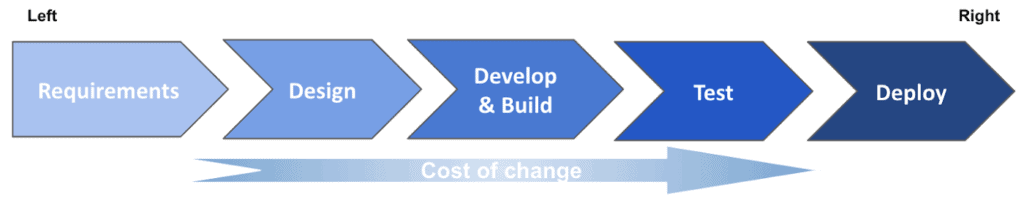

To ensure the security of their systems, organisations introduce tools and processes that test an application for potential vulnerabilities once it has already been designed, developed and tested, just before it goes into production (the right side of the SDLC diagram). The findings then go back to the development team (the left side of the SDLC), which implements the proper fixes.

These black-box tests, meaning tests that are executed against a running application without visibility of the code, are known as Dynamic Application Security Testing (DAST) or penetration tests.

Performing penetration tests is probably the most realistic way to discover potential security vulnerabilities in a running application, since it reveals the actual attack surface at a given moment. Furthermore, such tools and processes do not depend on the programming languages or design used during development. This is also why DAST sits in the last (right) part of the SDLC, where the process has already moved away from design and code to deployable artefacts.

What we observe is that organisations, especially traditional ones, depend mostly, if not solely, on penetration tests for their security. They underestimate the value of spending time on secure coding and believe that all vulnerabilities can be discovered in the testing phase.

Problems of DAST

First of all, even though there are some “agile penetration testing” solutions that run DAST tools in CI pipelines, manual DAST executed by pen-test experts provides higher confidence in the findings. However, this is not an “agile” method, considering that these tests run once at the end of the testing phase, just before the application is deployed. Being a non-agile methodology, manual DAST comes with inherent risks: the later the issues are discovered, the slower and more expensive they are to fix. As a result, deadlines are missed or, even worse, security is compromised.

Secondly, since DAST is executed against a running application, weaknesses and vulnerabilities that are hidden behind hardware (e.g. firewalls) or network architecture (e.g. VPNs) cannot be discovered. A lack of “defence in layers” can lead to security breaches, either by an external attacker who has managed to get into the internal network, something we see quite often, or by a malicious employee of the organisation.

Another aspect that is completely opaque to DAST is whether developers follow security best practices and whether the software follows the Security by Design principle. Some of the potential risks that cannot be identified by DAST and penetration tests are:

- Vulnerabilities that are inherited from 3rd party components,

- Business logic issues that can be misused for exploiting vulnerabilities,

- Incorrect or non-centralised implementation of authorisation and authentication mechanisms,

- Sensitive data leakage from logs, which people from the organisation have access to,

- Inadequate logging and monitoring mechanisms, which leave no evidence to rely on in case of critical events,

- and more insecure constructs in the system that are not directly exploitable today, but may become exploitable in the future.
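One of the risks listed above, sensitive data leaking into logs, can be reduced in the code itself. The following is a minimal sketch in Python, assuming the standard `logging` module; the patterns in `SENSITIVE_PATTERNS` and the names `redact` and `RedactingFilter` are hypothetical and would need to reflect an organisation's own data classification.

```python
import logging
import re

# Hypothetical patterns for this sketch; a real system would derive them
# from its own data classification policy.
SENSITIVE_PATTERNS = [
    # key=value pairs such as password=..., token=..., secret=...
    (re.compile(r"(?i)\b(password|token|secret)=\S+"), r"\1=[REDACTED]"),
    # 13 to 16 consecutive digits, a rough stand-in for card numbers
    (re.compile(r"\b\d{13,16}\b"), "[REDACTED-NUMBER]"),
]


def redact(text: str) -> str:
    """Return text with every sensitive pattern replaced by a placeholder."""
    for pattern, replacement in SENSITIVE_PATTERNS:
        text = pattern.sub(replacement, text)
    return text


class RedactingFilter(logging.Filter):
    """Logging filter that redacts the fully formatted message of a record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = None  # arguments are already merged into msg
        return True  # keep the record, only its content changes
```

Attaching the filter to a handler, e.g. `handler.addFilter(RedactingFilter())`, applies the redaction to everything that handler writes. Pattern-based redaction is only a safety net; not logging sensitive values in the first place remains the stronger control.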

Problems on culture

Furthermore, from our interactions with development teams we observe that the problem runs even deeper: it lies in their culture. A few common problems observed from an organisational culture perspective are:

- Lack of ownership on security subjects,

- Insufficient knowledge of common application security risks such as OWASP’s top 10,

- Lack of collaboration between developers and Information Security teams, with the latter engaged too late in the SDLC,

- Copying code from forums and other online sources such as Stack Overflow, which are full of insecure examples.

Shifting left on security

The idea of shifting left on security means integrating security as early as possible into the SDLC, making it everyone’s responsibility. As discussed, resolving security issues at the beginning is much cheaper and builds a system with integrated security at its core. Most importantly, by integrating security from the start, organisations build a culture around security awareness, creating a shared responsibility on the subject among all the participants of the SDLC.

6 steps to “Security by Design”

The steps are ordered as follows: they start by building awareness of security concepts, then define standards and principles, followed by tools and processes that check adherence to them, and eventually lead to a cultural shift of the organisation towards security by design.

First, considering that security requires specific knowledge and is usually overlooked, developers need to be aware of security principles. The best way to acquire such knowledge is via security training in both general secure coding principles (e.g. denying access by default or using defence in layers) and technology- or framework-specific concepts (e.g. preventing XSS in JSP).
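To make the two kinds of principles concrete, here is a minimal sketch in Python (used only as a stand-in language; the article's XSS example refers to JSP). The `PERMISSIONS` table, the role names and the functions `is_allowed` and `render_comment` are hypothetical. The first function denies access by default; the second escapes user input before placing it in HTML, using the standard library's `html.escape`.

```python
import html
from typing import Dict, FrozenSet, Tuple

# Hypothetical allow-list mapping a role to the (action, resource) pairs
# it may perform. Anything missing from this table is denied.
PERMISSIONS: Dict[str, FrozenSet[Tuple[str, str]]] = {
    "viewer": frozenset({("read", "report")}),
    "editor": frozenset({("read", "report"), ("write", "report")}),
}


def is_allowed(role: str, action: str, resource: str) -> bool:
    """Grant access only on an explicit match; unknown roles get nothing."""
    return (action, resource) in PERMISSIONS.get(role, frozenset())


def render_comment(comment: str) -> str:
    """Escape user-supplied text before embedding it in an HTML fragment."""
    return f"<p>{html.escape(comment)}</p>"
```

The design choice in `is_allowed` is that a forgotten entry results in a refused request rather than in an accidental grant, which is the safer failure mode.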

Second, security policies and procedures need to be in place that explicitly define the proper choice of programming languages and frameworks, a set of predefined packages and libraries, and a strategy for selecting and upgrading them.

Third, maintainable code and design support faster resolution of vulnerabilities by making it easier for developers to read, understand, change and test the code. For example, vulnerable dead code left in the codebase can still be (re)used by team members. Likewise, duplicating (copy/pasting) code also duplicates its security issues, which eventually leads to a larger attack surface.

Fourth, considering how many aspects developers have to keep in mind, we suggest adopting a secure coding standard. This would be a specific set of agreed practices and principles that Information Security and Application Development teams can fall back on when needed. An example of a generic secure coding standard is OWASP’s Application Security Verification Standard. There are also technology-specific standards, e.g. for Java, Angular etc. These standards will help vendors of security services and tools, as well as internal consumers and procurement departments, to align their requirements and offerings. Once secure coding standards are in place, adherence to them should be checked via secure architecture and code reviews performed by experienced developers or experts in the field.

Fifth, tooling and automation are an integral part of agile software development and continuous delivery: they save significant time and effort on repetitive tasks, prevent human errors, provide faster feedback and act as a quality gate for many functional and non-functional requirements, including security. There are four main types of tools for performing security checks:

- Dynamic Application Security Testing (DAST) tools, which automatically perform black-box tests against the deployed application. Such tools typically run once before the system goes live. Their advantages and disadvantages are discussed in the previous sections of this article.

- Static Application Security Testing (SAST) tools, which identify and report security vulnerabilities in source code through static analysis. SAST can run during the development phase with very high frequency, e.g. on each commit, providing very fast feedback to the developers. Examples of the vulnerabilities it finds are missing input validation and potential injection vulnerabilities, numerical errors, path traversals, exposure of sensitive data and more. The most significant disadvantages of SAST tools are that they need heavy customisation before they can perform the analysis and that they produce a vast number of false positives, which take considerable time to review and need to be reviewed by a security expert.

- Software Composition Analysis (SCA) tools, which analyse the 3rd party components used by the application under development. To avoid reinventing the wheel, developers reuse external components developed by others and published as open-source software, and these components can carry vulnerabilities of their own. A recent example is the Log4Shell vulnerability in Log4j, which affected 93% of enterprise cloud environments, including those of large corporations and governments. SCA tools can identify known vulnerabilities in those components, e.g. according to the NIST National Vulnerability Database (NVD), and indicate whether the components need to be patched or replaced. As with SAST, SCA tools can run on every commit for fast feedback.

- And other hybrid methods like Interactive Application Security Testing (IAST).
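To give a feel for what static analysis means in practice, the sketch below inspects Python source code without running it, using the standard library's `ast` module. It is a toy illustration, not how any particular SAST product works: the rule set (`RISKY_CALLS` and the `shell=True` check) and the function name `scan_source` are assumptions made for this example, and real tools add data-flow analysis and far larger rule sets.

```python
import ast
from typing import List, Tuple

# Hypothetical rule set for this sketch: built-in calls treated as risky.
RISKY_CALLS = {"eval", "exec"}


def scan_source(source: str) -> List[Tuple[int, str]]:
    """Return (line number, message) findings without executing the code."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Rule 1: direct call to a risky built-in such as eval(...)
        if isinstance(node.func, ast.Name) and node.func.id in RISKY_CALLS:
            findings.append((node.lineno, f"call to {node.func.id}()"))
        # Rule 2: any call passing the literal keyword shell=True
        for keyword in node.keywords:
            if (
                keyword.arg == "shell"
                and isinstance(keyword.value, ast.Constant)
                and keyword.value.value is True
            ):
                findings.append((node.lineno, "call with shell=True"))
    return sorted(findings)
```

In a pipeline, a check like this could run on each commit and fail the build when the list of findings is not empty, which is the fast feedback loop described above. Simple pattern rules such as these also show where false positives come from: the check cannot tell whether the flagged input is actually attacker-controlled.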

Sixth, involving the Information / Application Security teams in the design and development process is crucial. One idea is to build a “Purple team”, the intersection of the “Red team” (the team that performs attacks, e.g. penetration testers / Information Security) and the “Blue team” (the team that implements defences, e.g. the developers). If the Information Security team is involved in the design process of a new feature, they can help map out applications by sensitivity and investigate the key entry points an attacker can use to compromise the application. This way the development team can anticipate the potential vulnerabilities. Finally, security and development teams should work together by proactively engaging in conversation, collaboration and knowledge exchange.

Through iterations of this learning and collaborative process, continuous improvement is expected, e.g. guided by OWASP’s Software Assurance Maturity Model. This last point creates a cultural shift towards making security a shared responsibility, also known as DevSecOps.

Conclusion

Security is probably the most critical non-functional requirement for software products due to its significant business impact; yet from our observations we see that it is handled in a very old-fashioned / waterfall way. Shifting left moves security to earlier stages of the software development processes and makes it the responsibility of all involved parties.

Over the years application security has matured significantly. All the digital native companies (the Silicon Valley cool kids) have been doing security by design for a while, proving its contribution to safer, faster and cheaper software development. Lately we notice a tendency in the industry, even among traditional organisations, to consider integrating security practices into development, especially as part of their “digital transformation” or “cloud migration” processes.

Even though it may seem like a novel concept for software developers, it isn’t. In fact, there is a plethora of tools and processes to help them and, most importantly, established guidelines and standards. This can lead to the ultimate goal of creating a culture of security throughout the whole SDLC and building more secure systems from the inside out.